Method Overview

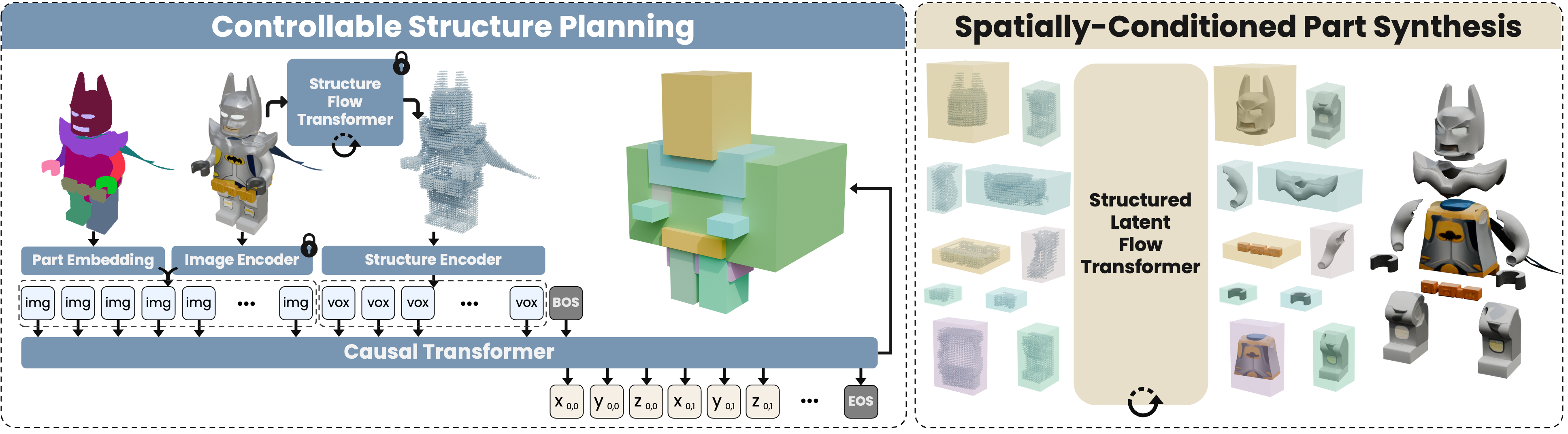

OmniPart generates part-aware, controllable, and high-quality 3D content through two key stages: part structure planning and structured part latent generation.

Built upon TRELLIS, which provides a spatially structured sparse voxel latent space, OmniPart first predicts part-level bounding boxes via an auto-regressive planner.

It then generates part-specific latent codes with a large-scale shape model that was pretrained on whole objects and fine-tuned for part-level generation.
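As a rough illustration of how the two stages connect, the sketch below groups occupied voxels of a sparse grid by the part bounding boxes that a planner might output. It is a minimal sketch under stated assumptions, not OmniPart's implementation: `PartBox`, `group_voxels_by_box`, the inclusive integer box corners, and the toy coordinates are all hypothetical, and the planner and the latent generator themselves are not modeled.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Voxel = Tuple[int, int, int]


@dataclass(frozen=True)
class PartBox:
    """Hypothetical axis-aligned part box in voxel-grid coordinates."""
    name: str
    lo: Voxel  # inclusive minimum corner (assumption)
    hi: Voxel  # inclusive maximum corner (assumption)

    def contains(self, v: Voxel) -> bool:
        # A voxel is inside when every coordinate lies within [lo, hi].
        return all(l <= c <= h for l, c, h in zip(self.lo, v, self.hi))


def group_voxels_by_box(
    voxels: Sequence[Voxel], boxes: Sequence[PartBox]
) -> Dict[str, List[Voxel]]:
    """Collect, for each box, the occupied voxels that fall inside it.

    Boxes may overlap, so a voxel can appear in more than one group.
    """
    groups: Dict[str, List[Voxel]] = {box.name: [] for box in boxes}
    for v in voxels:
        for box in boxes:
            if box.contains(v):
                groups[box.name].append(v)
    return groups


# Toy data: five occupied voxels and two boxes standing in for planner output.
voxels = [(1, 1, 1), (2, 1, 1), (5, 5, 5), (6, 5, 5), (3, 3, 3)]
boxes = [
    PartBox("seat", (0, 0, 0), (3, 3, 3)),
    PartBox("back", (3, 3, 3), (7, 7, 7)),
]
groups = group_voxels_by_box(voxels, boxes)
```

In this toy setup the voxel `(3, 3, 3)` lies in both boxes. How such overlaps are resolved in the actual method is not described in this overview, so the sketch simply keeps the voxel in both groups.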

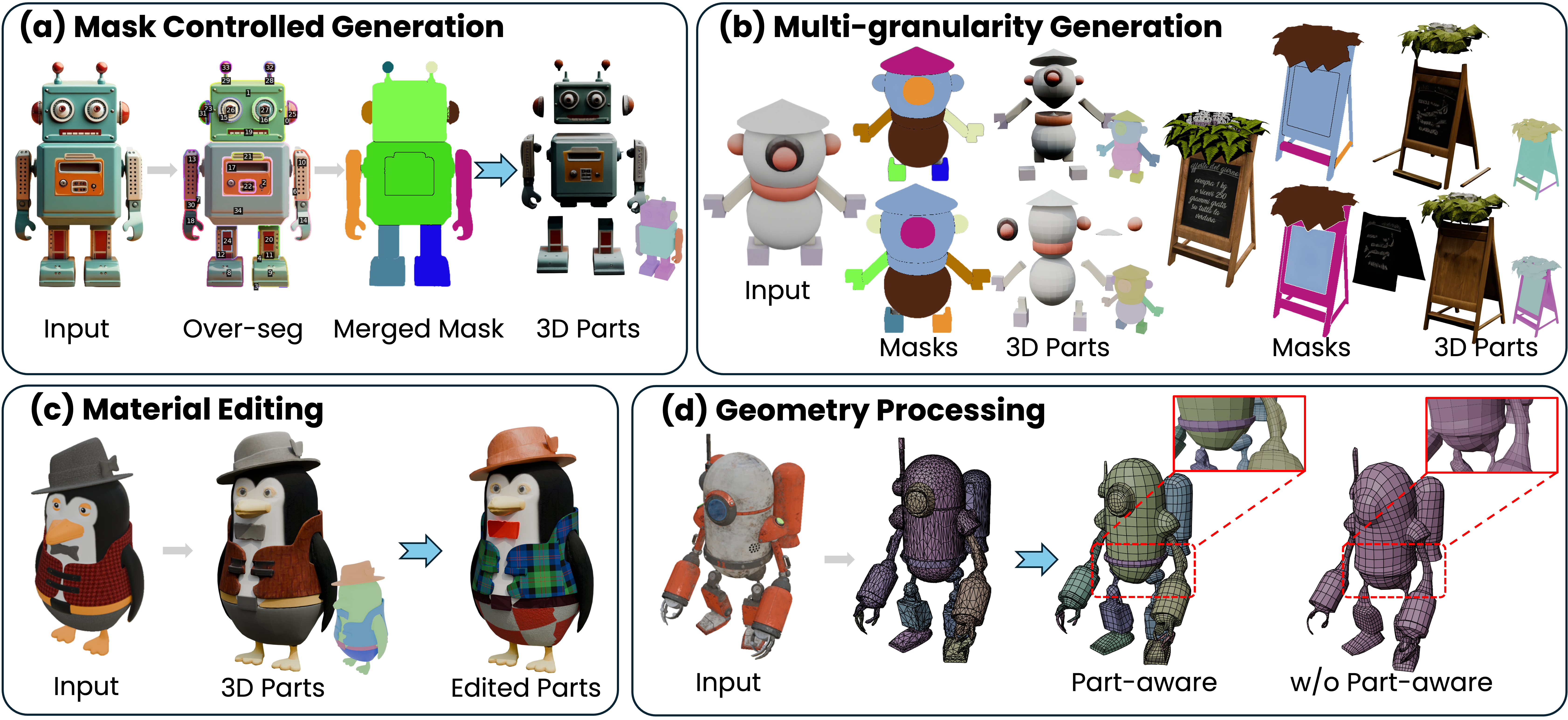

OmniPart generates high-quality part-aware 3D content directly from a single input image and naturally supports a range of downstream applications, including Animation, Mask-Controlled Generation, Multi-Granularity Generation, Material Editing, and Geometry Processing.